How do languages balance the richness of their structures with the need for efficient communication? To investigate, researchers at the Leibniz Institute for the German Language (IDS) in Mannheim, Germany, trained computational language models on a vast dataset covering thousands of languages.

They found that languages that are computationally harder to process compensate for this increased complexity with greater efficiency: more complex languages need fewer symbols to encode the same message. The analyses also reveal that larger language communities tend to use more complex but more efficient languages.

Language models are computer algorithms that learn to process and generate language by analyzing large amounts of text. They excel at identifying patterns without relying on predefined rules, making them valuable tools for linguistic research. Importantly, not all models are the same: their internal architectures vary, shaping how they learn and process language. These differences allow researchers to compare languages in new ways and uncover insights into linguistic diversity.

In their study, the researchers trained language models on more than 6,500 documents in more than 2,000 languages, covering almost 3 billion words. The texts included religious writings, legal documents, movie subtitles, newspaper articles, and many other kinds of text.

The researchers estimated how difficult it is for the computational models to process or produce text, using this as a measure of language complexity. The work is published in the journal PLOS Complex Systems.

“We trained very different language models on this textual material,” says co-author Sascha Wolfer. “Some simple models only consider the last two words, which limits their ability to capture grammatical patterns over long distances. Others, such as transformers (similar to ChatGPT), use advanced mechanisms to analyze complex dependencies and uncover richer linguistic structures.”
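The idea of using prediction difficulty as a complexity measure can be made concrete with a toy example. The sketch below is a minimal illustration, not the researchers' code. It assumes difficulty is measured as cross-entropy, meaning the average number of bits a model needs per word. It uses a word-level trigram model with add-one smoothing, which, like the simple models Wolfer mentions, conditions only on the previous two words. The function names `train_trigram` and `bits_per_token` and the toy sentences are hypothetical.

```python
import math
from collections import Counter

# Hypothetical illustration, not the study's code: a word-level trigram model
# that predicts each word from the previous two, with add-one smoothing.

def train_trigram(tokens):
    """Count trigrams and their two-word contexts in a training text."""
    padded = ["<s>", "<s>"] + tokens
    triples = list(zip(padded, padded[1:], padded[2:]))
    trigrams = Counter(triples)
    # Count contexts only where a following word exists, so probabilities sum to 1.
    contexts = Counter((a, b) for a, b, _ in triples)
    vocab = set(tokens) | {"<unk>"}
    return trigrams, contexts, vocab

def bits_per_token(tokens, model):
    """Average cross-entropy in bits per token; higher means harder to predict."""
    trigrams, contexts, vocab = model
    # Map words never seen in training to a shared unknown-word symbol.
    tokens = [t if t in vocab else "<unk>" for t in tokens]
    padded = ["<s>", "<s>"] + tokens
    total_bits = 0.0
    for a, b, c in zip(padded, padded[1:], padded[2:]):
        # Add-one smoothing: every vocabulary word gets a nonzero probability.
        p = (trigrams[(a, b, c)] + 1) / (contexts[(a, b)] + len(vocab))
        total_bits -= math.log2(p)
    return total_bits / len(tokens)

train = "the cat sat on the mat the cat sat on the mat".split()
model = train_trigram(train)
print(round(bits_per_token(train, model), 2))
print(round(bits_per_token("mat on cat the sat the".split(), model), 2))
```

On this toy data the model needs fewer bits per word for the text it was trained on than for a scrambled sentence. A real comparison across languages would instead evaluate held-out text with far larger models and corpora.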

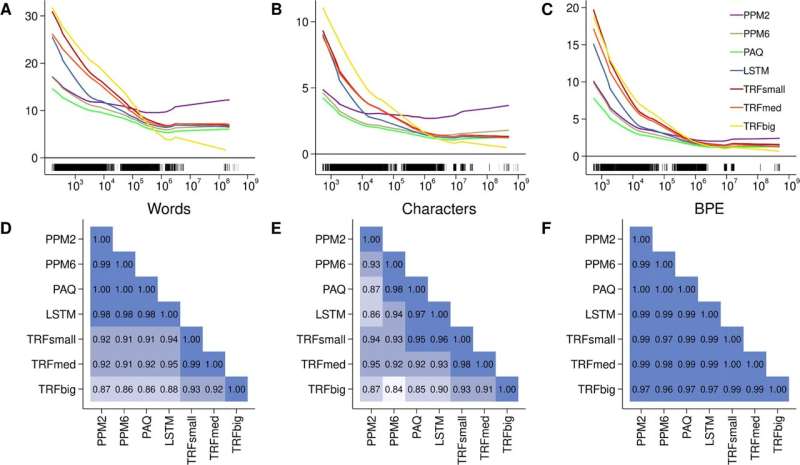

Surprisingly, the results were consistent: despite significant architectural differences, the models produced remarkably similar rankings of language complexity.

“If one language is harder to process than another for one model in one corpus, this relationship holds across other models, text types, and even if the model operates on a different symbolic level, e.g. characters instead of words,” explains co-author Peter Meyer. “These findings suggest that the results may not only reflect computational effort but could also offer insights into the intrinsic complexity of human languages.”
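Whether two models "agree" on a ranking can be quantified with a rank correlation. The sketch below computes Spearman's rank correlation in plain Python. It is an illustrative choice, not necessarily the statistic the authors used, and the scores for four languages are made-up placeholders rather than results from the study.

```python
import math

def ranks(values):
    """Return 1-based ranks, giving tied values the average of their ranks."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    result = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        # Extend j over a run of tied values.
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        avg_rank = (i + j) / 2 + 1
        for k in range(i, j + 1):
            result[order[k]] = avg_rank
        i = j + 1
    return result

def spearman(x, y):
    """Spearman's rho: the Pearson correlation of the two rank vectors."""
    rx, ry = ranks(x), ranks(y)
    n = len(rx)
    mx, my = sum(rx) / n, sum(ry) / n
    cov = sum((a - mx) * (b - my) for a, b in zip(rx, ry))
    sx = math.sqrt(sum((a - mx) ** 2 for a in rx))
    sy = math.sqrt(sum((b - my) ** 2 for b in ry))
    return cov / (sx * sy)

# Made-up complexity scores (bits per symbol) for the same four languages
# under two different hypothetical models.
model_a = [4.1, 5.3, 6.0, 7.2]
model_b = [2.0, 2.6, 2.4, 3.1]
print(round(spearman(model_a, model_b), 3))  # → 0.8
```

A value near 1 means the two models order the languages almost identically, even if their raw scores differ, which is the kind of agreement Meyer describes.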

Why, then, would some languages evolve to be more complex, given the increased effort required for processing? A key finding of the study may provide an answer: there is a trade-off between complexity and efficiency. Languages with higher complexity tend to produce shorter texts to convey the same content, reflecting a compensatory mechanism where increased structural intricacy is offset by greater efficiency in communication.
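A back-of-the-envelope way to see this compensation relies on a simplifying assumption that is not stated in the study: that translations of the same document carry roughly the same total information $I$. If a language needs $H$ bits per symbol on average, its text length $N$ in symbols is then approximately:

$$
I \approx H \cdot N
\quad\Longrightarrow\quad
N \approx \frac{I}{H}
$$

With purely illustrative numbers, a message of $I = 10{,}000$ bits takes $N = 2{,}000$ words at $H = 5$ bits per word, but only $1{,}250$ words at $H = 8$ bits per word. The harder-to-predict language uses fewer symbols for the same content.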

“So maybe the extra effort required to learn a complex language has its benefits,” suggests Alexander Koplenig, lead author of the study.

“Once you’ve mastered it, a complex language might offer more options to express yourself, which can make it easier to convey the same idea using fewer symbols. This is relevant, because we also show that this trade-off is shaped by the social environments in which languages are used, with larger communities tending to use more complex but more efficient languages.”

One could speculate that in large societies, institutionalized education enables greater linguistic complexity by providing systematic, formal language instruction that supports the acquisition and use of intricate linguistic structures. At the same time, the importance of written communication in larger societies may create pressure for shorter messages that reduce the costs of production, storage, and transmission, such as paper for books, storage space, or bandwidth.

“This combination—education enabling complexity and practical needs driving efficiency—could explain why languages in larger communities evolve the way they do,” Koplenig continues. “Testing this speculative hypothesis is a fascinating direction for future research.”

More information: Alexander Koplenig et al., Human languages trade off complexity against efficiency, PLOS Complex Systems (2025). DOI: 10.1371/journal.pcsy.0000032

Provided by Leibniz Institute for the German Language

Citation: Study finds complex languages may be more efficient for communication (2025, February 4), retrieved 4 February 2025 from https://phys.org/news/2025-02-complex-languages-efficient-communication.html

This document is subject to copyright. Apart from any fair dealing for the purpose of private study or research, no part may be reproduced without the written permission. The content is provided for information purposes only.